
Speeding-Up Software Builds: Parallelizing Make and Compiler Cache

Original post is here eklausmeier.goip.de/blog/2015/05-14-speeding-up-software-builds-parallelizing-make-and-compiler-cache.

1. Problem statement

Compiling source code usually involves the make command, which keeps track of dependencies. In addition, GNU make can parallelize a build using the -j option. Often you also want a so-called clean build, i.e., recompiling all source files, just in case make missed some files during an incremental build. Instead of throwing away all previous effort, one can use a cache of previous compilations.
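As a minimal sketch of the -j option in practice, the snippet below derives a job count from the number of online cores and prints the resulting make invocation. The helper names cpu_count and make_command are made up for this illustration; nproc is assumed to come from GNU coreutils, with getconf as a fallback.

```shell
# Number of online CPU cores. nproc is part of GNU coreutils; getconf is a fallback.
cpu_count() {
    nproc 2>/dev/null || getconf _NPROCESSORS_ONLN
}

# Print (not run) a make invocation with one job per core.
# make_command is a hypothetical helper name used only in this sketch.
make_command() {
    printf 'make -j%s\n' "$(cpu_count)"
}

make_command
```

On the 8-core machine described here this would print make -j8. Printing the command instead of running it keeps the sketch free of side effects.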

I had two questions where I wanted quantitative answers:

- What is the best j for parallel make, i.e., how many parallel jobs should one start?
- What effect does a compiler cache have?

For the second question, I used Andrew Tridgell's ccache as the compiler cache; he wrote it for Samba.
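One common way to put ccache in front of the compiler is to prepend its directory of compiler symlinks to PATH. The sketch below assumes that directory is /usr/lib/ccache, which matches the /usr/lib/ccache/cc call in the raw numbers but depends on the distribution. The names CCACHE_LINKS and ccache_path are hypothetical, and this is not necessarily how the measurements here were set up.

```shell
# Assumption: the distribution installs ccache's compiler symlinks (cc, gcc, ...) here.
CCACHE_LINKS=/usr/lib/ccache

# Print a PATH value with the symlink directory in front,
# so that "cc" resolves to the ccache wrapper before the real compiler.
ccache_path() {
    printf '%s:%s\n' "$CCACHE_LINKS" "$PATH"
}

ccache_path
```

With that in place, something like PATH=$(ccache_path) make -j8 would route compiler calls through the cache, and ccache -s prints the hit and miss statistics.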

For these tests I used the source code of the SLURM scheduler, see slurm.schedmd.com. This software package contains roughly 1,000 C source and header files (~600 C plus ~300 header files), comprising about 550 kLOC. My machine has an AMD FX-8120 (Bulldozer) CPU with 8 cores clocked at 3.1 GHz, and 16 GB RAM.

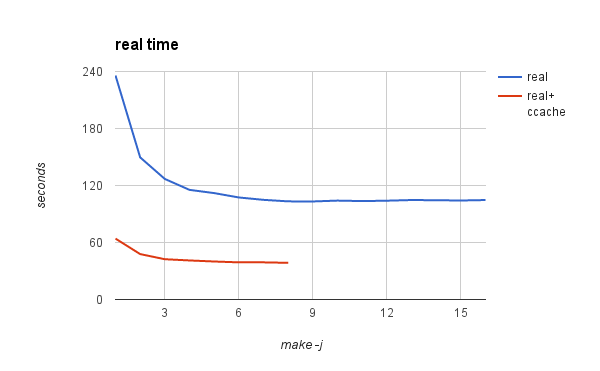

I went through the dull task of compiling the SLURM software with different settings of make, then cleaning up everything, and repeating the cycle. The chart below shows the results for varying j, once without and once with a compiler cache. Execution time is in seconds; it is the "real" time reported by the time command.
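The clean-and-rebuild cycle described above could be automated along the following lines. This is a sketch, not the script used for the measurements: job_levels and bench_make are hypothetical names, and bench_make is only defined here, so nothing is built until it is called inside a configured source tree.

```shell
# Print the job counts to test, highest first, one per line.
job_levels() {
    n=$1
    while [ "$n" -ge 1 ]; do
        echo "$n"
        n=$((n - 1))
    done
}

# One measurement cycle per job count: clean, then time a full rebuild.
# Assumption: bash, where "time" is a shell keyword; other shells may need /usr/bin/time.
bench_make() {
    for j in $(job_levels "$1"); do
        make clean > /dev/null
        echo "make -j$j"
        time make -j"$j" > /dev/null
    done
}
```

Calling bench_make 16 in the SLURM directory would walk from 16 jobs down to 1, cleaning before each run.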

runtime for parallel make


2. Conclusions and key findings

- Running more parallel make jobs than there are processor cores on the machine does not gain you performance. It does no harm, but it does not help either.
- make -j without an explicit number of parallel tasks is a good choice.
- The C compiler cache ccache speeds up compilation by a factor of up to 5, sometimes even more. There is no good reason not to use a compiler cache.

3. Raw numbers

Making all of SLURM:

    tar jxf slurm-14.11.4.tar.bz2
    cd slurm-14.11.4
    ./configure
    time make
    real    4m36.470s
    user    3m24.248s
    sys     1m12.379s

Between all compilations the result is cleaned:

    time make clean
    real    0m5.558s
    user    0m2.014s
    sys     0m3.912s

Now compiling and cleaning, with the job count going down from unlimited (-j without a number) to 16, 15, and so on down to 1.

    time make -j > /dev/null
    real    1m44.970s
    user    4m17.657s
    sys     0m46.102s

    time make -j16
    real    1m44.144s
    user    4m16.120s
    sys     0m46.191s

    time make -j16 > /dev/null
    real    1m44.745s
    user    4m16.242s
    sys     0m46.358s

    time make -j15 > /dev/null
    real    1m44.231s
    user    4m16.457s
    sys     0m46.269s

    time make -j14 > /dev/null
    real    1m44.476s
    user    4m15.833s
    sys     0m47.091s

    time make -j13 > /dev/null
    real    1m44.675s
    user    4m17.787s
    sys     0m45.906s

    time make -j12 > /dev/null
    real    1m44.046s
    user    4m16.554s
    sys     0m46.575s

    time make -j11 > /dev/null
    real    1m43.612s
    user    4m16.319s
    sys     0m45.957s

    time make -j10 > /dev/null
    real    1m44.111s
    user    4m16.999s
    sys     0m46.181s

    time make -j9 > /dev/null
    real    1m43.239s
    user    4m16.244s
    sys     0m46.073s

    time make -j8 > /dev/null
    real    1m43.310s
    user    4m15.317s
    sys     0m46.257s

    time make -j7 > /dev/null
    real    1m44.913s
    user    4m9.122s
    sys     0m46.388s

    time make -j6 > /dev/null
    real    1m47.387s
    user    4m1.811s
    sys     0m46.165s

    time make -j5 > /dev/null
    real    1m51.977s
    user    3m52.737s
    sys     0m44.644s

    time make -j4 > /dev/null
    real    1m55.399s
    user    3m37.683s
    sys     0m44.401s

    time make -j3 > /dev/null
    real    2m6.940s
    user    3m31.548s
    sys     0m45.247s

    time make -j2 > /dev/null
    real    2m29.562s
    user    3m15.105s
    sys     0m45.061s

    time make -j1 > /dev/null
    real    3m55.786s
    user    3m12.081s
    sys     0m45.784s

Now the same procedure with ccache.

    time make -j > /dev/null
    real    0m38.625s
    user    0m37.360s
    sys     0m26.392s

    time make -j8 > /dev/null
    real    0m38.592s
    user    0m36.810s
    sys     0m26.214s

    time make -j7 > /dev/null
    real    0m39.086s
    user    0m36.790s
    sys     0m26.490s

    time make -j6 > /dev/null
    real    0m39.107s
    user    0m36.447s
    sys     0m26.119s

    time make -j5 > /dev/null
    real    0m40.034s
    user    0m36.930s
    sys     0m26.208s

    time make -j4 > /dev/null
    real    0m41.072s
    user    0m36.400s
    sys     0m26.573s

    time make -j3 > /dev/null
    real    0m42.400s
    user    0m36.205s
    sys     0m26.972s

    time make -j2 > /dev/null
    real    0m47.814s
    user    0m37.186s
    sys     0m27.551s

    time make -j1 > /dev/null
    real    1m4.060s
    user    0m37.844s
    sys     0m28.901s
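To turn the "real" times above into speedup factors, a small helper can convert the time format to seconds and divide. The following is a sketch using awk; to_seconds and speedup are names invented for this example, and the inputs are copied from the measurements above.

```shell
# Convert a value such as "1m43.310s" (as printed by time) to seconds.
to_seconds() {
    echo "$1" | awk -F'[ms]' '{ printf "%.3f\n", $1 * 60 + $2 }'
}

# Ratio of two timings (first divided by second), rounded to two decimals.
speedup() {
    awk -v a="$(to_seconds "$1")" -v b="$(to_seconds "$2")" \
        'BEGIN { printf "%.2f\n", a / b }'
}

speedup 3m55.786s 1m4.060s    # -j1: without ccache vs. with ccache, prints 3.68
speedup 1m43.310s 0m38.592s   # -j8: without ccache vs. with ccache, prints 2.68
speedup 3m55.786s 1m43.310s   # no ccache: -j1 vs. -j8, prints 2.28
```

For this build, the cache therefore contributes roughly a factor of 2.7 to 3.7 on full rebuilds, and parallelism alone a factor of about 2.3 on eight cores.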

Speed comparison for a simple C file:

    time cc -c j0.c
    real    0m0.043s
    user    0m0.034s
    sys     0m0.009s

    time /usr/lib/ccache/cc -c j0.c
    real    0m0.008s
    user    0m0.005s
    sys     0m0.004s

Code of the simple C file j0.c:

    #include <stdio.h>
    #include <stdlib.h>
    #include <math.h>

    int main (int argc, char *argv[]) {
        double x;
        double end = ((argc >= 2) ? atof(argv[1]) : 20.0);

        for (x=1; x<=end; ++x)
            printf("%3.0f\t%16.12f\n",x,j0(x));

        return 0;
    }

Counting lines of code in SLURM:

    $ wc `find . \( -name \*.h -o -name \*.c \)`

4. man page excerpt for make

    -j [jobs], --jobs[=jobs]
        Specifies the number of jobs (commands) to run simultaneously. If there is more than one -j option, the last one is effective. If the -j option is given without an argument, make will not limit the number of jobs that can run simultaneously.
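The effect of -j can be tried without a large source tree. The sketch below writes a throwaway Makefile with four independent targets that each sleep for one second; the helper name write_demo_makefile, the variable demo_dir and the target names t1 to t4 are all invented for this demonstration.

```shell
# Write a Makefile with four independent targets that each sleep for one second.
write_demo_makefile() {
    {
        printf 'all: t1 t2 t3 t4\n'
        printf 't1 t2 t3 t4:\n'
        printf '\tsleep 1\n'          # recipe lines must start with a tab
        printf '.PHONY: all t1 t2 t3 t4\n'
    } > "$1/Makefile"
}

demo_dir=$(mktemp -d)
write_demo_makefile "$demo_dir"
cat "$demo_dir/Makefile"
```

Running time make -C "$demo_dir" -j1 should then take roughly four seconds, while -j4 or a bare -j should finish in about one, since the four sleeps can run simultaneously. The directory can be removed afterwards with rm -r "$demo_dir".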